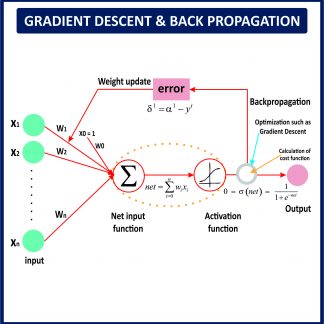

Gradient Descent & Back Propagation

₹600.00

Gradient descent is an optimization algorithm that minimizes a function by iteratively moving in the direction of steepest descent, which is given by the negative of the gradient. In machine learning, gradient descent is used to update a model's parameters: the coefficients in linear regression and the weights in neural networks.
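As a rough illustration of the update rule described above, here is a minimal Python sketch that fits a straight line by gradient descent on the mean squared error. It is not taken from the workbook: the function name `gradient_descent`, the learning rate, the step count, and the toy data are all assumptions chosen for illustration.

```python
def gradient_descent(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by minimizing mean squared error (illustrative sketch)."""
    w, b = 0.0, 0.0  # assumed starting point; any initial guess works here
    n = len(xs)
    for _ in range(steps):
        # Prediction errors under the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Move against the gradient, i.e. in the direction of steepest descent.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (hypothetical, for illustration only).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
w, b = gradient_descent(xs, ys)
print(round(w, 4), round(b, 4))
```

On this toy data the recovered `w` and `b` should land close to the slope 2 and intercept 1 used to generate it. A learning rate that is too large makes the updates diverge, so the value here would need tuning for other data.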

This Excel workbook contains two sheets: one explains the gradient descent algorithm using a simple example, and the other uses an advanced example. The workbook itself explains every step used in the modelling.
